SIGGRAPH Emerging Technologies

2025

Program Chair:

Nathan Matsuda

After many years attending, publishing, reviewing, and demoing at SIGGRAPH, I volunteered to serve on the 2025 conference committee as Emerging Technologies program chair. Working with my fellow Experience Hall program chairs over a little more than a year and a half, we brought in a global group of contributors whose work spanned SIGGRAPH topics like robotics, haptics and interaction, sensing, fabrication, and display technologies. During the conference in Vancouver, Canada, over 12,000 attendees had the opportunity to try these new technologies first-hand alongside art installations, immersive experiences, educational courses, trade show booths, technical papers and posters, and film production behind-the-scenes talks. This conference has long been the center of my professional community, and it was very rewarding to devote my energy to making this year's gathering the best it could be.

Multisource Holography

2023

Grace Kuo,

Florian Schiffers,

Douglas Lanman,

Oliver Cossairt,

and Nathan Matsuda

A major challenge in producing a high-quality holographic display is the amount of noise introduced into the image by speckle: interference between the uncontrolled light paths in the system. This concept by Grace Kuo optimizes a hologram for multiple, closely spaced laser sources that together average out some of this noise. The result is comparable to showing multiple holograms in quick succession and letting the eye average out the speckle, except this approach works in a single frame, an important capability for immersive displays.
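The averaging intuition above can be illustrated with a small numerical sketch. This is not the optimization from the paper; it only simulates the "average several speckle patterns" analogy, assuming fully developed, statistically independent speckle whose intensity follows a unit-mean exponential distribution. The function name `speckle_contrast` and its parameters are hypothetical, chosen for illustration. Under those assumptions, speckle contrast (standard deviation divided by mean) should fall roughly as 1/sqrt(N) when N patterns are averaged.

```python
import numpy as np


def speckle_contrast(num_patterns, num_pixels=200_000, seed=0):
    """Contrast (std / mean) after averaging independent speckle patterns.

    Assumption: each pattern is fully developed speckle, so its intensity
    is exponentially distributed with unit mean, and patterns are
    statistically independent of one another.
    """
    rng = np.random.default_rng(seed)
    # One row per simulated speckle pattern, one column per pixel.
    intensities = rng.exponential(scale=1.0, size=(num_patterns, num_pixels))
    # Averaging across patterns models the eye (or a single multisource
    # frame) integrating several speckle realizations.
    averaged = intensities.mean(axis=0)
    return averaged.std() / averaged.mean()


if __name__ == "__main__":
    for n in (1, 4, 16):
        measured = speckle_contrast(n)
        predicted = 1.0 / np.sqrt(n)
        print(f"N={n:2d}  measured={measured:.3f}  1/sqrt(N)={predicted:.3f}")
```

In this toy model a single pattern has contrast near 1, and sixteen averaged patterns bring it down to about a quarter of that, which is why averaging, whether over time or across sources within one frame, is attractive for reducing speckle.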

Perspective-Correct VR Passthrough Without Reprojection

2023

Grace Kuo,

Eric Penner,

Seth Moczydlowski,

Douglas Lanman,

and Nathan Matsuda

The passthrough cameras on VR headsets cannot possibly be in the same position as the user's actual eyes, so the view of the outside world shown to the user always has the wrong perspective. Current headsets use a warping operation to try to move pixels to the perspective-correct location, but this doesn't work very well. Our paper introduces an optical approach to capture the perspective at the user's eye, using an array of lenses with an aperture behind each lens that passes only the light rays that would have gone into the eye. We presented this technical paper at SIGGRAPH 2023, along with live demos in the Emerging Technologies program, where we won Best in Show for the conference.

McDonald's - Twelve Year Old Me (ft. Karen X Cheng)

2023

Director:

José Norton

Agency: IW Group

Production Company: Picture North

VFX by: Nathan Matsuda

This Lunar New Year 2023 commercial for McDonald's is the first to feature Neural Radiance Field (NeRF) 3D captures, which were popularized on social media by the star of this spot, Karen X. Cheng. These visuals were provided by Luma.ai, but of course integrating this new technique into conventionally shot footage required conventional visual effects, so my long-time collaborators at Picture North asked me to step in for preproduction consultation, post-production workflow development, compositing, and general problem solving.

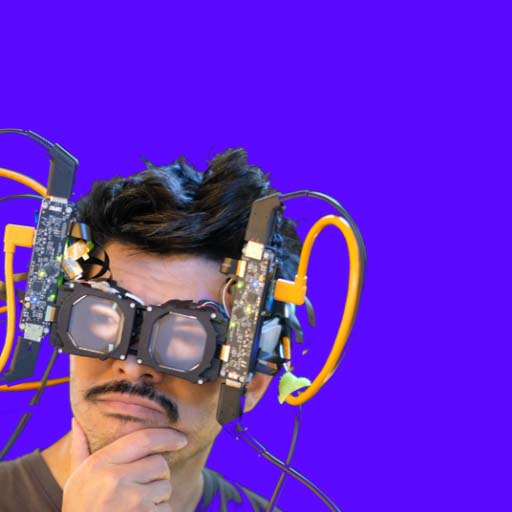

Hands-On with Meta's New VR Headset Prototypes - Adam Savage's Tested

2022

Presenter:

Norm Chan

Featuring: Mark Zuckerberg, Douglas Lanman, Yang Zhao, Phil Guan, and Nathan Matsuda

In May 2022, Norm Chan from tested.com was the first media visitor to our normally-closed labs. Several of my colleagues and I showed Norm the prototypes we were working on and chatted about our work to more fully realize the potential of virtual reality display technology. In my section, I gave a demo of the Starburst project I'd led for the previous year and a half.

Realistic Luminance in VR

2022

Nathan Matsuda*,

Alexandre Chapiro*,

Yang Zhao,

Clinton Smith,

Romain Bachy,

and Douglas Lanman

VR headsets are quite dim compared to the real world, but just how much does this limit the immersiveness of VR experiences? We started to answer this complex question by conducting a user study using our 20,000 nit (about as bright as a 60W light bulb) Starburst VR headset, which presented participants with a series of calibrated natural scenes at varying peak luminances. We found that people's luminance preferences are correlated with the true measured luminance of a scene, and that people innately pick luminances with statistically significant differences between indoor and outdoor scenes. These findings move our understanding of these luminance preferences beyond television and cinema settings, and into immersive VR, for the first time.

Press:

UploadVR

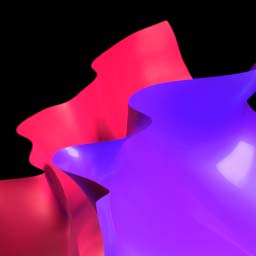

Enjoy The Silence

2022

Creative Director:

GMUNK

Production Company: Psyop

After designing and building our high dynamic range Starburst headset, we wanted to explore how a visual artist would use this expanded capability, and give people a more complete and exciting showcase of HDR VR capabilities. To do this, we turned to GMUNK, a widely accomplished artist working across photography, digital media, physical installations, and immersive experiences. Along with a small team of technical directors, GMUNK produced a series of three ambient audiovisual environments that walk the viewer through increasingly vivid sights and sounds. We brought this experience to the SIGGRAPH 2022 Emerging Technologies program, where it won Best in Show.

The Visual Turing Test

2022

Director:

Lake Buckley

Agency: SpecialGuest

Production Company: SpecialGuest

Featuring: Douglas Lanman, Phil Guan, Marina Zannoli, Andrew Maimone, and Nathan Matsuda

This video provides a quick summary of the work that my team at Meta, Display Systems Research, conducted in its first 4 years, under the direction of Douglas Lanman. Shot over the course of one weekend at our office in Redmond, WA, this spot showcases a number of the hardware prototypes we have built, including varifocal VR demonstrators, a distortion simulator for perceptual studies, diffractive viewing optics demonstrators, and the HDR VR demonstrator project that I led.

Mirror Lake Concept

2022

Presenter:

Michael Abrash

Mechanical Engineering: Ryan Ebert

Animation & Rendering: Nathan Matsuda

I worked on this concept as an internal direction-setting exercise with Doug, Ryan, and others on the team to think about how our individual projects would eventually come together. Michael Abrash presented the idea in conversation with Mark Zuckerberg during their opening remarks for the 2022 Inside The Lab review of our work.

Reverse Passthrough VR

2021

Nathan Matsuda,

Brian Wheelwright,

Joel Hegland,

and Douglas Lanman

With recent passthrough-enabled VR headsets, it's possible to carry on a conversation with another person in the room while wearing a headset, since you can see them and perceive all of the usual social cues like eye contact. The other person does not get these cues, though, since your own face is blocked by the headset. After more than a year of rapid prototyping, we introduced the idea of showing the VR user's face with 3D displays on the front of the headset capable of showing a perspective-correct view of their eyes. I presented this while wearing the prototype at a series of pandemic-era virtual conferences starting with SIGGRAPH 2021 Emerging Technologies, then later at DCExpo Japan, Optica Frontiers in Optics, and finally with a technical paper at SIGGRAPH Asia 2021.

viz1090 ADSB Visualizer

2021-2023

Nathan Matsuda

This ongoing project displays air traffic information captured via software defined radio and dump1090 on a draggable map. While many more practical, full-featured versions of this exist, such as FlightAware, I wanted to make something that could run on relatively low-power embedded devices, like a Raspberry Pi, and focus mainly on the aesthetics of the software.

Oculus Connect 6

2019

Presenter:

Michael Abrash

Mechanical Engineering: Ryan Ebert

Production Company: Zoic

CG Supervisor: Dave Funston

Creative Director: Nathan Matsuda

Michael Abrash presented our team's ongoing work on varifocal VR displays at the annual Oculus Connect developer conference. I worked with my former colleagues at Zoic Studios to produce an animated sequence showing exploded view and functional depictions of the varifocal display pod the team developed. These animations were featured in Michael's keynote talk as well as a corresponding blog post. I also took the photographs of the prototype headsets shown throughout the presentation.

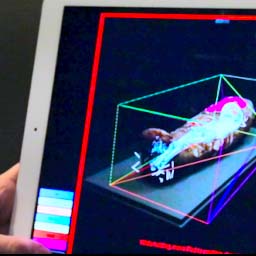

Paint The Eyes Softer: Mummy Portraits From Roman Egypt - The Block Museum of Art

2018

Essi Rönkkö,

Taco Terpstra,

Kyle Engelmann,

Nathan Matsuda,

Oliver Cossairt,

and Marc Walton

In the final few months of my time at Northwestern I had the opportunity to work with the Block Museum of Art on an exhibition showcasing new research on late Roman portrait mummies. The centerpiece of the exhibit was new tomographic reconstructions of the interior of a mummy from 100 CE, which we presented via an iPad-based AR app. I led the development of this app, working with intern Kyle Engelmann to optimize the heavy tomographic geometry for real-time display, then using the then-new ARKit tools to overlay this model on the actual mummy when the app was used in the exhibition space.

Focal Surface Displays

2017

Nathan Matsuda,

Alexander Fix,

and Douglas Lanman

My first project with Meta (then Oculus) began as part of the very first class of summer interns at the company. My manager, Douglas Lanman, was starting to look at ways to build headset display systems that support accommodation, the human visual system's innate focusing mechanism. One approach that we discussed, and that turned into this paper, was to vary the focus across the field of view by computationally creating a freeform lens and correcting for the distortions this surface produces on the image.

Doctor Strange - End Credits

2016

Creative Director:

Erin Sarofsky

VFX Supervisor: Matthew Crnich

Production Company: Sarofsky

Motion Designers: Andrez Aguayo, Brent Austin, Chris Beers, Patrick Coleman, Jon Jamison, Alex Kline, Zach Landua, Jeff McBride, Anthony Morrelle, Dan Tiffany, and Nathan Matsuda

This was a fun summer project where I did look development and compositing with the team at Sarofsky.

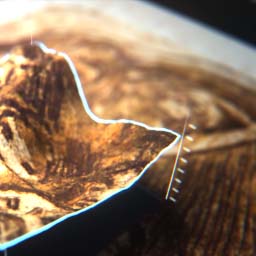

Surface Shape Studies Of The Art Of Paul Gauguin

2015

Oliver Cossairt,

Xiang Huang,

Nathan Matsuda,

Harriet Stratis,

Mary Broadway,

Jack Tumblin,

Greg Bearman,

Eric Doehne,

Aggelos Katsaggelos,

and Marc Walton

Some of the most interesting projects I was involved with in grad school were collaborations with Marc Walton's Center for Scientific Studies in the Arts at Northwestern. In this project, we looked at applying computational photography techniques to assist conservationists at the Art Institute of Chicago in evaluating Paul Gauguin block prints. In addition to this paper, some of these results were included in a Gauguin retrospective at the museum later that year.

Motion Contrast 3D Scanning

2015

Nathan Matsuda,

Mohit Gupta,

and Oliver Cossairt

This was one of two main projects I worked on during grad school. The idea is that asynchronous event sensors, which fire off individual detection events asynchronously for each pixel on the sensor (rather than capturing an entire image of intensities at once), can optimally sample a laser spot scanning around in a scene, the most well-established way of doing 3D scanning. Conventional 3D scans of this type take minutes or hours to capture, whereas the lab prototype we built could complete one scan in a matter of milliseconds, enabling 3D video capture. On top of that, the approach was very robust to bright ambient light and reflective surfaces, both of which stymie other real-time 3D capture approaches.

The Modern Ocean - Preproduction VFX Test

2014

Director:

Shane Carruth

Production Company: VFX Legion

3D Graphics: Dave Funston

Compositing: Nathan Matsuda

After doing VFX on a short film starring Shane Carruth, the director reached out to brainstorm VFX for his upcoming (since shelved) project 'The Modern Ocean'. Along with my former Zoic colleague Dave Funston, I put together a couple of fully CG ship tests.

Once Upon A Time

2011-2013

Creative Director:

Andrew Orloff

Production Company: Zoic

Compositing Supervisor: Nathan Matsuda

I spent several years of my life immersed in developing fantastical characters and environments as compositing supervisor for the ABC show Once Upon A Time (and was nominated for a Primetime Emmy as a result). During the show's run we pioneered virtual production techniques that are now commonplace, regularly delivering episodes with hundreds of shots on a two-week cadence. At the time, this was the highest volume of VFX ever produced for a television series.

V

2011

Creative Director:

Andrew Orloff

Production Company: Zoic

Lead Compositor: Nathan Matsuda

ABC's remake of the alien invasion show V was the first big virtual production project at Zoic and my introduction to sequence supervision.

Car Crash Flashback Sequence - Fast & Furious

2009

Production Company:

Zoic

VFX Supervisor: Chris Jones

Compositing: Arthur Argote and Nathan Matsuda

My very first job after moving to LA was compositing on this hybrid flashback sequence showing a car crash unfolding around Vin Diesel, realized with a fully CG car.

@nathanmatsuda
